Llama

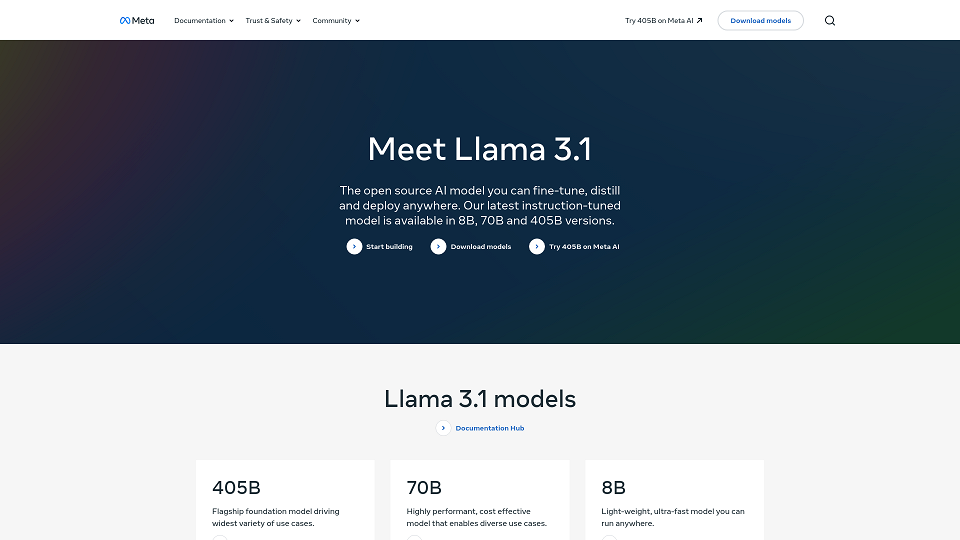

Llama 3.1

The open source AI model you can fine-tune, distill and deploy anywhere. Our latest models are available in 8B, 70B, and 405B variants.

Llama Introduction

Llama 3.1 is an open-source family of AI models developed by Meta, designed for fine-tuning, distillation, and deployment across a range of applications. The latest instruction-tuned models are available in 8B, 70B, and 405B parameter sizes, covering a wide range of user needs and hardware budgets.

Llama 3.1 Models

Llama 3.1 offers a suite of models with varying strengths:

405B

This flagship foundation model is the most powerful of the three and supports the widest range of use cases. It excels at demanding tasks such as tool use, multi-lingual agents, complex reasoning, and coding assistance. Examples include analyzing uploaded datasets, translating between languages, solving reasoning problems, and generating code that creates a maze.

70B

This model balances performance and cost-effectiveness, making it suitable for diverse use cases. It offers a compelling option for users who need high performance without the resource demands of the 405B model.

8B

This lightweight and ultra-fast model prioritizes efficiency and portability. Its small size allows it to run effectively on a variety of devices, making it ideal for users with limited computational resources or those needing quick responses.
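To make the size differences concrete, a common back-of-the-envelope estimate for weight memory is parameter count times bytes per parameter. The sketch below assumes the nominal parameter counts (8B, 70B, 405B) and three common numeric precisions. It deliberately ignores the KV cache, activations, and runtime overhead, so real requirements are higher. The function and table names are illustrative and do not come from Meta's documentation.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumptions: nominal parameter counts; KV cache, activations, and
# runtime overhead are NOT included, so treat results as a lower bound.

NOMINAL_PARAMS = {"8B": 8e9, "70B": 70e9, "405B": 405e9}
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(model: str, precision: str) -> float:
    """Return the approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return NOMINAL_PARAMS[model] * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for model in NOMINAL_PARAMS:
        row = ", ".join(
            f"{precision}: {weight_memory_gb(model, precision):,.1f} GB"
            for precision in BYTES_PER_PARAM
        )
        print(f"{model:>4} -> {row}")
```

Under these assumptions the 8B weights need roughly 16 GB at 16-bit precision, while the 405B weights need roughly 810 GB, which is why the smaller model fits on far more devices.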

Key Capabilities

Llama 3.1 empowers users to build advanced AI applications. Key capabilities include:

- Tool Use: Llama 3.1 can interact with external tools and datasets. For instance, it can analyze uploaded data, generate graphs, and fetch market data upon request.

- Multi-lingual Agents: The model exhibits proficiency in multiple languages. It can translate text, converse in different languages, and perform language-based tasks effectively.

- Complex Reasoning: Llama 3.1 demonstrates advanced reasoning abilities. It can solve complex problems, understand context, and provide insightful answers to intricate queries.

- Coding Assistants: The model can assist with coding tasks. It can generate code in various programming languages, debug existing code, and provide code suggestions.
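Tool use generally works by having the model emit a structured request that the host application parses, executes, and feeds back. The sketch below shows only the application side of that loop. The JSON shape (`name` plus `parameters`), the two tools, and the hard-coded model output are all assumptions made for illustration; check the official prompt-format documentation for the exact format a given Llama release expects.

```python
import json
from typing import Any, Callable, Dict, List


def mean(values: List[float]) -> float:
    """Hypothetical analysis tool: arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def fetch_price(ticker: str) -> float:
    """Hypothetical market-data tool, stubbed with a fixed lookup table."""
    prices = {"ACME": 12.5}  # placeholder data, not a real quote
    return prices[ticker]


# Registry mapping tool names to the Python callables that implement them.
TOOLS: Dict[str, Callable[..., Any]] = {"mean": mean, "fetch_price": fetch_price}


def run_tool_call(model_output: str) -> Any:
    """Parse a JSON tool call and execute the matching registered tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("parameters", {}))


if __name__ == "__main__":
    # Hard-coded string standing in for text the model would generate.
    fake_output = '{"name": "mean", "parameters": {"values": [2, 4, 9]}}'
    print(run_tool_call(fake_output))
```

Keeping an explicit registry means the model can only trigger functions the application has chosen to expose.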

Make Llama Your Own

Llama's open ecosystem allows for customization and integration with various services:

Inference

Users can choose between real-time and batch inference services. Alternatively, downloading the model weights and self-hosting them can further reduce the cost per token, giving users flexibility in managing their resources and budgets.

Fine-tune, Distill & Deploy

Llama 3.1 can be adapted to a specific task, improved with synthetic data, and deployed anywhere from on-premises hardware to cloud environments. This flexibility lets Llama be tailored to specific applications and integrated into existing workflows.

RAG & Tool Use

Leveraging Llama's system components, users can extend the model's capabilities through zero-shot tool use and Retrieval Augmented Generation (RAG). This enables the creation of agentic behaviors, allowing Llama to interact with and retrieve information from external sources, thereby enhancing its knowledge base and problem-solving abilities.
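The retrieval step of RAG can be sketched in a few lines: score stored passages against the question, keep the best matches, and place them in the prompt. The example below is a toy, assuming simple word-overlap scoring in place of the embedding model and vector store a production system would normally use. The passages are paraphrased from this page, and the prompt wording and function names are illustrative only.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents sharing the most tokens with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the answer in retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Use only the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


DOCS = [
    "The 8B model is lightweight and fast.",
    "The 70B model balances performance and cost-effectiveness.",
    "The 405B model is the flagship foundation model.",
]

if __name__ == "__main__":
    print(build_prompt("Which model is the flagship?", DOCS, k=1))
```

The resulting string would then be sent to the model; swapping `retrieve` for an embedding-based search leaves the rest of the flow unchanged.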

Synthetic Data Generation

The 405B model's ability to generate high-quality synthetic data allows for the improvement of specialized models. This is particularly beneficial for niche use cases where real-world data might be scarce. By generating synthetic data, users can train and refine their models for specific tasks, improving their accuracy and performance.
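A synthetic-data workflow of this kind can be outlined as: prompt a large "teacher" model, filter its outputs, and save the surviving pairs for fine-tuning a smaller model. The sketch below is a minimal outline under stated assumptions. `stub_teacher` is a placeholder standing in for a real call to a 405B endpoint, the length and duplicate filters are simplistic examples, and the `prompt`/`completion` record layout is one common convention rather than a required format.

```python
import json
from typing import Callable, Dict, Iterable, List


def build_synthetic_dataset(
    prompts: Iterable[str],
    generate: Callable[[str], str],
    min_chars: int = 10,
) -> List[Dict[str, str]]:
    """Generate one completion per prompt, dropping short or duplicate ones."""
    seen = set()
    records = []
    for prompt in prompts:
        completion = generate(prompt).strip()
        if len(completion) < min_chars or completion in seen:
            continue  # basic quality filter; real pipelines use stronger checks
        seen.add(completion)
        records.append({"prompt": prompt, "completion": completion})
    return records


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(record) for record in records)


def stub_teacher(prompt: str) -> str:
    """Placeholder for a teacher-model call; returns canned text."""
    return f"Synthetic answer for: {prompt}" if "?" in prompt else ""


if __name__ == "__main__":
    prompts = ["What is RAG?", "not a question", "What is RAG?"]
    print(to_jsonl(build_synthetic_dataset(prompts, stub_teacher)))
```

Passing the generator in as a callable keeps the filtering logic independent of whichever hosting partner or local runtime serves the teacher model.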

Model Pricing

Hosted access to Llama 3.1 is priced by partners, including AWS, Azure, Databricks, and others. Pricing varies with model size, usage (input and output tokens), and the chosen platform. These flexible pricing structures make Llama accessible to a wide range of users, from individuals to large organizations, with different needs and budget constraints.
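Because hosted pricing is typically quoted separately for input and output tokens, a request's cost can be estimated with simple arithmetic. The sketch below assumes prices are quoted per million tokens, which is a common convention but not guaranteed for every partner. The prices in the example are made-up placeholders, not actual rates from any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Price per one million tokens, in a single currency (assumed USD)."""

    input_per_million: float
    output_per_million: float


def estimate_cost(input_tokens: int, output_tokens: int, price: TokenPrice) -> float:
    """Return the estimated cost of a workload at the given token prices."""
    input_cost = (input_tokens / 1_000_000) * price.input_per_million
    output_cost = (output_tokens / 1_000_000) * price.output_per_million
    return input_cost + output_cost


if __name__ == "__main__":
    # Placeholder prices for illustration only; look up real partner rates.
    example_price = TokenPrice(input_per_million=3.0, output_per_million=4.0)
    total = estimate_cost(2_000_000, 500_000, example_price)
    print(f"Estimated cost: {total:.2f}")
```

Running the same workload through several `TokenPrice` values is a quick way to compare platforms or model sizes before committing to one.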

Llama Frequently Asked Questions

What is Llama 3.1?

Llama 3.1 is an open-source AI model developed by Meta that you can fine-tune, distill, and deploy for various applications. It's available in 8B, 70B, and 405B variants.

What are the key differences between the 8B, 70B, and 405B versions?

The versions differ in size and capabilities. The 8B model is lightweight and fast, suitable for running on a variety of devices, including those with limited computational resources. The 70B model offers a balance of performance and cost-effectiveness. The 405B model is the most powerful, designed for the widest range of use cases.

Can I try Llama 3.1 before downloading it?

Yes, you can try the 405B version on the Meta AI platform.

What are some examples of what I can do with Llama 3.1?

Llama 3.1 supports tool use (e.g., data analysis, graph plotting), multi-lingual agents (e.g., translation), complex reasoning, and coding assistance.

How can I fine-tune Llama 3.1 for my specific needs?

You can find resources and guides for fine-tuning, distillation, and deployment on the Llama website and GitHub repository.

Is there an ecosystem of partners or services built around Llama?

Yes, Llama has an open ecosystem with partners offering services like real-time inference, batch inference, fine-tuning, model evaluation, RAG, continual pre-training, safety guardrails, synthetic data generation, and distillation recipes.

How does Llama 3.1 perform compared to other models?

Llama 3.1 has been evaluated on over 150 benchmark datasets and shows strong performance across various categories, including general knowledge, code, math, reasoning, tool use, and multilingual tasks. You can find detailed evaluation results on the website and in the research paper.

How much does it cost to use Llama 3.1?

Llama 3.1 is open-source, but the cost of using it depends on how you access and deploy it. Pricing details are available for hosted inference APIs from partners such as AWS, Azure, Databricks, Fireworks.ai, IBM, Octo.ai, Snowflake, and Together.AI.

Where can I find the latest updates and news about Llama?

You can stay up-to-date by subscribing to the Llama newsletter, following Meta's AI blog and newsroom, or checking the Llama website.

Are there any resources for learning more about responsible AI development and use?

Yes, Meta provides a Responsible Use Guide and emphasizes the importance of ethical considerations in AI development and deployment.

Where can I find support or connect with the Llama community?

You can access community support and resources, and connect with other users, through the Llama website and GitHub repository.